Energy Transition Investment Passes Petro, Generalized Artificial Agents & Intel's Humbling

Week 16

Dose of Hope & Innovation in Atoms: science, medicine, & engineering

A Chinese company claimed to have developed miniaturized atomic energy batteries that can power your phone for decades. Production supposedly starts next year. Won’t believe it till I see it, but big if true. Meanwhile, more countries are following China’s lead in actively exploring decarbonizing container ships by powering them with the latest SMR nuclear reactor designs.

Nature’s seven technologies to watch in 2024

A new wearable device prototype from the Caltech professor who made the female hormone sensor combines enzymatic analysis of sweat biomarkers with physiological signals like skin temperature to estimate stress.

FDA Approves AI Software for Alzheimer's Prediction: The FDA approved "BrainSee," an AI software by Darmiyan, predicting Alzheimer's disease (AD) progression in individuals with mild cognitive impairment. Utilizing MRI data and cognitive test scores, BrainSee estimates AD dementia risk within five years, with an accuracy of 88% to 91%.

Gene Therapy Enables Hearing in a Child: Akouos, acquired by Lilly, developed AK-OTOF, a gene therapy that successfully treated an 11-year-old boy with congenital hearing loss due to a mutation in the otoferlin gene. The therapy, involving a dual AAV-based system, restored hearing across all frequencies within 30 days. This breakthrough establishes gene therapy as a viable treatment for congenital hearing loss and opens possibilities for treating a range of sensorineural hearing conditions. (Decoding Bio)

CZI is studying the cellular basis of memory in animal models, hoping to test whether that can lead to therapeutic manipulation in neurodegenerative diseases

First-ever RNA editing trial in US gets clearance to treat a type of inherited vision loss via exon editing: “Administered via AAV, Ascidian's exon editing strategy aims to treat the disease, which is associated with over 1000 mutations. These are too many mutations to create individual treatments for, and the gene involved is too large for delivery. By hijacking the RNA splicing process, the company believes it can treat the disease where most other therapies cannot.”

“Combined small-molecule treatment accelerates maturation of human pluripotent stem cell-derived neurons”

Eleven US states now get over half their electricity from renewables (and 10 of them have below-average electricity prices)

CATL aims to start delivering the fastest-charging EV battery in a couple of months. It adds 250 miles of range in 10 minutes and reaches its maximum of 430 miles in 15 minutes.

Energy Transition Investment Passes Petro

BloombergNEF released a good report on energy transition financing. Here are my key takeaways:

$1.1 trillion (with a T!) invested in 2022, passing petro investment for the first time

But that’s still only about a third of what’s needed going forward to meet BNEF’s Net Zero Scenario

China by FAR the largest contributor, US / EU distant 2nd

The Land of Bits: software, silicon, algorithms, computation, & robotics

A Harvard x QuEra team achieved 48 *logical* qubits in a quantum computer design, beating Google’s previous record by 4x. Major step for quantum.

Jim Fan gave a good TED talk on his effort to build a foundational agent that scales across different realities. He argues that if an agent can master 10,000 diverse digital realities (e.g. he made major advances in training an AI model to play Minecraft and is working on teaching virtual avatars karate at simulated speeds many times faster than reality), then it may well generalize to the 10,001st reality: our physical world.

UC Berkeley robotics researchers showed that reinforcement learning methods are improving such that robots can learn to walk, grasp something, etc. from raw images many times faster than before (in as little as 15-30 min). Meanwhile, Carnegie Mellon researchers released a full-stack approach for manipulating objects in open-ended unstructured environments (think: unlocking doors with lever handles/round knobs/spring-loaded hinges, opening cabinets, drawers, and refrigerators) using RL. The model adapts online, updating itself for previously unseen objects, and learns to operate them in under an hour without new demos. The framework doubled performance. It operates on hardware costing ~$25K.

RWKV released its latest attention-free model. It has 7B parameters and was trained on 1T tokens (meaningfully fewer than similarly sized models from Meta and Mistral). Adjusting for the training data size, it beats even Mistral on multilingual language modeling. It claims 10-100x lower inference cost than attention-based models, and it beat RWKV-4 by 4% on the same dataset. They’ll be doubling the training dataset size next.

ByteDance researchers built a foundation model for depth estimation from a single image that’s on par with LiDAR, meaning we only need cameras for autonomous vehicles:

Google released a diffusion model achieving a remarkable sub-second inference speed for generating a 512 × 512 image on mobile devices. Meanwhile, Alibaba released a mobile device multi-modal agent model that can “accurately identify and locate both the visual and textual elements within the app's front-end interface. Based on the perceived vision context, it then autonomously plans and decomposes the complex operation task, and navigates the mobile Apps through operations step by step.” And Apple released a method to more efficiently train small models:

Google released a new text-to-video diffusion model that “uses a ‘space-time’ neural network to generate clips in a single pass, as opposed to other models that create distant keyframes. Essentially, this avoids the ‘choppiness’ seen in other generations.”

China’s top models, at least on the face of it, appear to be catching up to the US’s best models of 2023 despite sanctions. Meanwhile, Gina Raimondo is considering forcing US cloud providers to do KYC to try to block Chinese firms from developing their models on US cloud infrastructure.

China’s CXMT is racing to make the country’s first high bandwidth memory

ASML bookings remove any doubt that the semiconductor industry has bottomed (Microsoft and Google are also guiding capex spend higher):

Below is a side view of a semiconductor sliced in half. The ticks at the bottom aren’t that much wider than an atom and are what perform the computation that powers all our electronics:

New material discovered for semis:

Researchers at Stanford have demonstrated that a new material may make phase-change memory – which relies on switching between high and low resistance states to create the ones and zeroes of computer data – an improved option for future AI and data-centric systems. Their scalable technology, as detailed recently in Nature, is fast, low-power, stable, long-lasting, and can be fabricated at temperatures compatible with commercial manufacturing. “We are not just improving on a single metric, such as endurance or speed; we are improving several metrics simultaneously. This is the most realistic, industry-friendly thing we’ve built in this sphere.”

Today’s computers store and process data in separate locations. Volatile memory – which is fast but disappears when your computer turns off – handles the processing, while nonvolatile memory – which isn’t as fast but can hold information without constant power input – takes care of the long-term data storage. Shifting information between these two locations can cause bottlenecks while the processor waits for large amounts of data to be retrieved.

“It takes a lot of energy to shuttle data back and forth, especially with today’s computing workloads,” said Xiangjin Wu. “With this type of memory, we’re really hoping to bring the memory and processing closer together, ultimately into one device, so that it uses less energy and time.”

There are many technical hurdles to achieving an effective, commercially viable universal memory capable of both long-term storage and fast, low-power processing without sacrificing other metrics, but the new phase change memory developed in Pop’s lab is as close as anyone has come so far with this technology.

The memory relies on GST467, an alloy of four parts germanium, six parts antimony, and seven parts tellurium, which was developed by collaborators at the University of Maryland. Pop and his colleagues found ways to sandwich the alloy between several other nanometer-thin materials in a superlattice, a layered structure they’ve previously used to achieve good nonvolatile memory results.

“The unique composition of GST467 gives it a particularly fast switching speed,” said Asir Intisar Khan. “Integrating it within the superlattice structure in nanoscale devices enables low switching energy, gives us good endurance, very good stability, and makes it nonvolatile – it can retain its state for 10 years or longer.” Finally, it can switch states in a few tens of nanoseconds while operating below one volt, which is a big deal.
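For a sense of what "four parts germanium, six parts antimony, and seven parts tellurium" means in percentage terms, here is a quick back-of-the-envelope sketch. It assumes "parts" refers to an atomic ratio (i.e. Ge4Sb6Te7), which the article implies but doesn't state outright; the function and variable names are mine, for illustration only.

```python
from fractions import Fraction

# Parts of each element in GST467 as quoted above. Reading "parts" as an
# atomic ratio (Ge4Sb6Te7) is an assumption, not something the article states.
GST467_PARTS = {"Ge": 4, "Sb": 6, "Te": 7}


def atomic_fractions(parts):
    """Return each element's share of the total as an exact Fraction."""
    total = sum(parts.values())
    return {element: Fraction(count, total) for element, count in parts.items()}


if __name__ == "__main__":
    for element, share in atomic_fractions(GST467_PARTS).items():
        # Show the exact fraction alongside a rounded percentage.
        print(f"{element}: {share} = {float(share):.1%}")
```

Under that assumption the alloy works out to roughly 23.5% germanium, 35.3% antimony, and 41.2% tellurium.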

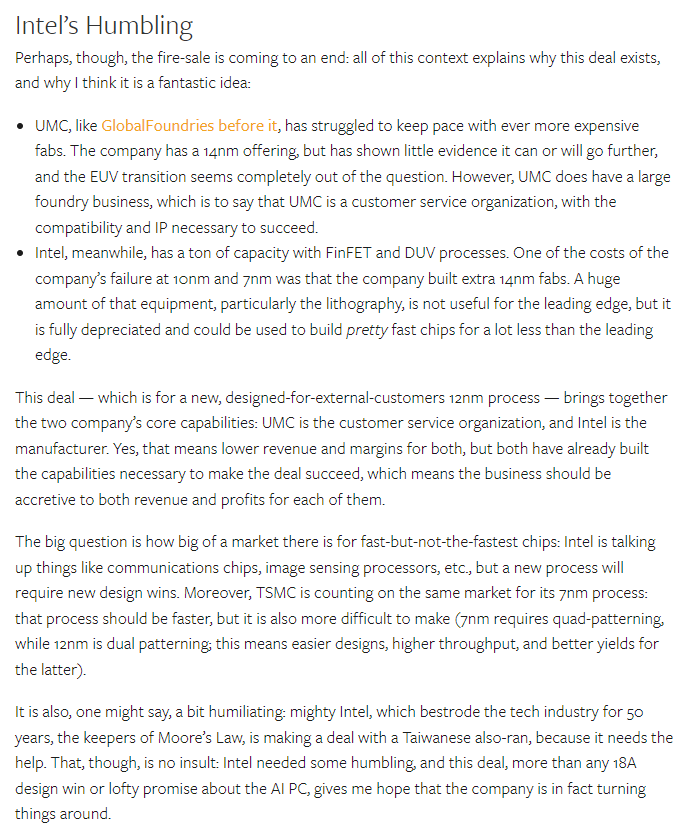

Intel’s Humbling

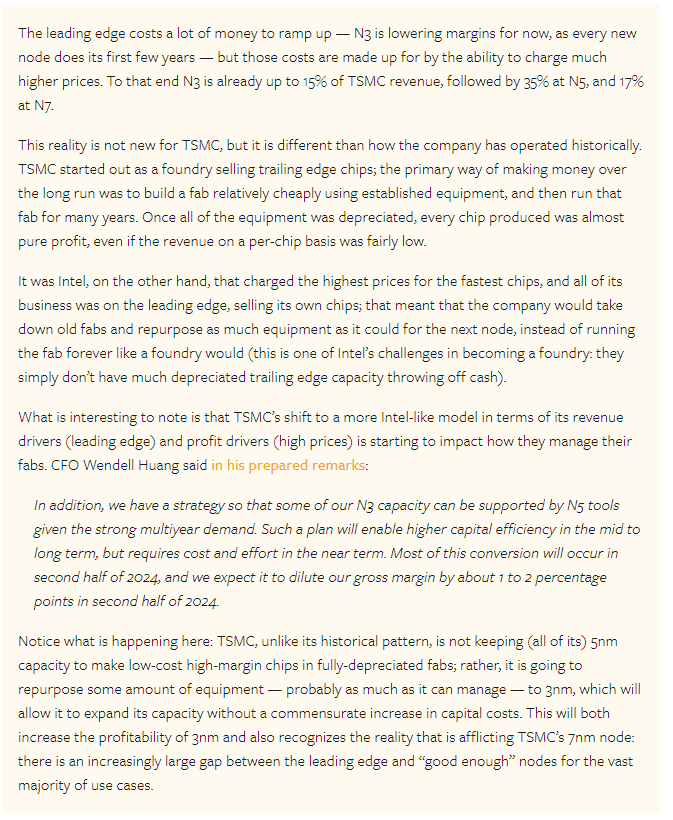

Good Stratechery article on Intel’s long-needed transition to a foundry business model where they make semiconductors designed by other firms (what TSMC pioneered) in addition to their own fully integrated chips. The newish CEO is also pushing to regain leading-edge node competitiveness, “if you believe the company’s claims about its 18A process, which is the fabled fifth of the ‘five nodes in four years’ that Gelsinger promised shortly after he took over, and it appears that he is pulling it off:”

Stratechery also talked about how the dynamics of the foundry business will make for a handful of precarious years:

C.R.E.A.M: macro, markets, commodities, and geopolitics

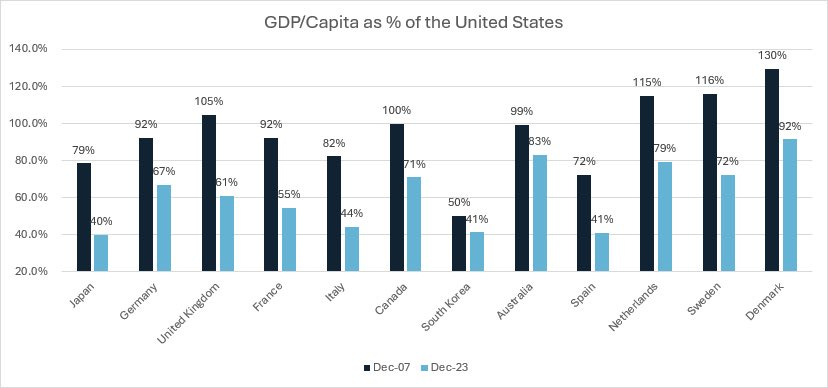

The US has dominated the last 15 years of growth, while Europe has been particularly stagnant (though note that the gap is a bit less extreme when adjusted for currencies):

The US economy continues ripping! Atlanta Fed’s GDPNow tracker estimates current real growth at 4.2%. And results for December blew away expectations:

“Markets have a dovish read on yesterday's Fed, adding 14 bps to cuts priced by end-2024 (top left), for a total of 150 bps in cuts this year. The other G10 also got more cuts priced, except for ECB, which markets think will lag. That's why inflation break-evens are the ECB trade.” (Robin Brooks). The above data will likely reverse the constant buying pressure on USTs since October.
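To put the quoted pricing in more familiar terms, here is a small sketch of the arithmetic. The 150 bps total and the 14 bps added are from the quote above; the 25 bp step size per cut is my assumption about the Fed's usual increment, and the names are just illustrative.

```python
# Figures quoted above; the 25 bp step size is an assumption about how the
# Fed typically moves, not a number from the quote.
TOTAL_CUTS_PRICED_BPS = 150
ADDED_AFTER_MEETING_BPS = 14
ASSUMED_CUT_SIZE_BPS = 25


def implied_pricing(total_bps, added_bps, cut_size_bps):
    """Return (bps priced before the meeting, implied number of cuts now)."""
    before_bps = total_bps - added_bps
    implied_cuts = total_bps / cut_size_bps
    return before_bps, implied_cuts


if __name__ == "__main__":
    before, cuts = implied_pricing(
        TOTAL_CUTS_PRICED_BPS, ADDED_AFTER_MEETING_BPS, ASSUMED_CUT_SIZE_BPS
    )
    print(f"Priced before the meeting: {before} bps")
    print(f"Implied cuts at {ASSUMED_CUT_SIZE_BPS} bps each: {cuts:.1f}")
```

In other words, markets went from pricing about 136 bps to 150 bps of easing, or the equivalent of six quarter-point cuts if that step size holds.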

IMF doubles Russian GDP growth expectation to 2.6%. Meanwhile, “Russia's imports increased by 10% in 2023 to $74 billion, reaching their pre-war level, with a rise in Asian and especially Chinese supply offsetting the drop in supply from Europe. Also suggests less room for China exports to Russia to rise this year”

Good thread explaining why the economy didn’t crash despite weak credit growth and money supply growth: it was “truly income-driven expansion where the velocity of money is "financing" the expansion.”

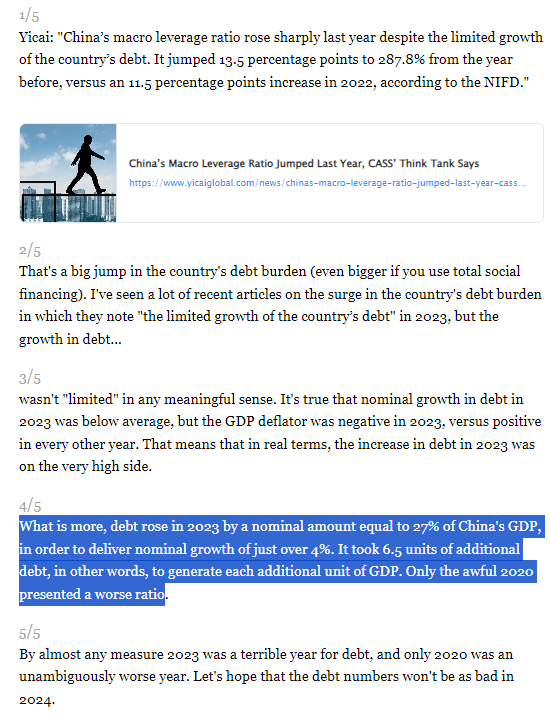

China’s debt-fueled growth strategy is running into its limits:

Uncontrolled immigration may be the biggest issue for Biden’s reelection:

Note that the Republicans are maximizing the political opportunity by simultaneously blocking Biden from passing policies to fix this error and running a national media campaign against him for the policy failure.